Looking into a humanoid robot’s eyes could impact your decision making, according to a recent research article published by scientists from Istituto Italiano di Tecnologia (IIT) in Genova, Italy.

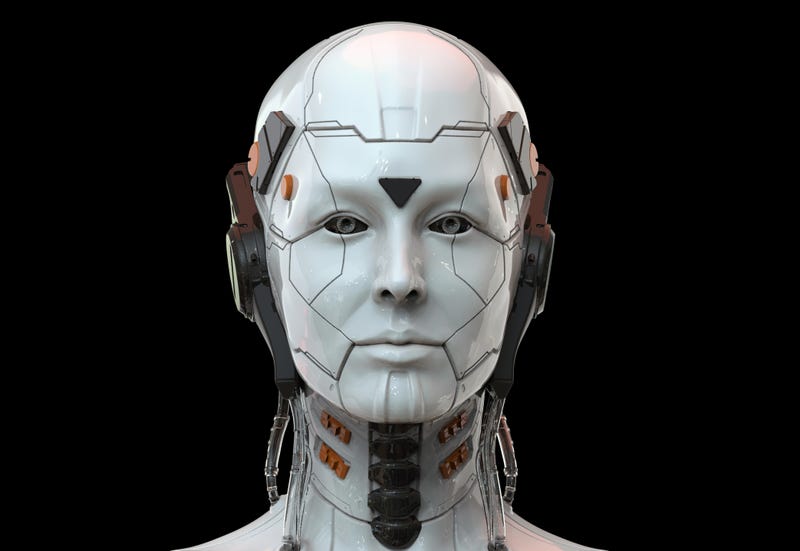

For the study, the researchers had participants play a strategic game against IIT’s “iCub” robot, which has a human-like face with large eyes. While participants played the game, the researchers measured their behavior and neural activity using electroencephalography (EEG).

According to Reuters, there were 40 participants in the study and they played the automobile-focused video game “chicken” with the iCub robot.

“In most everyday life situations, the brain needs to engage not only in making decisions but also in anticipating and predicting the behavior of others,” said an abstract about the project. “In such contexts, gaze can be highly informative about others’ intentions, goals, and upcoming decisions.”

When the iCub robot established mutual gaze before participants had to make a decision, they were slower to respond than when the robot averted its gaze, the study found.

“This was associated with a higher decision threshold in the drift diffusion model and accompanied by more synchronized EEG alpha activity,” explained the abstract.

Researchers found that the study participants “reasoned about the robot’s actions” both when the robot’s eyes appeared to be looking at them and when the robot’s eyes were turned away. However, the participants who dealt with robots that mostly averted their gaze “were more likely to adopt a self-oriented strategy” and had neural activity with higher sensitivity to outcomes.

“Together, these findings suggest that robot gaze acts as a strong social signal for humans, modulating response times, decision threshold, neural synchronization, as well as choice strategies and sensitivity to outcomes,” said the abstract.

The IIT researchers believe their findings have wide-ranging implications for human-robot interaction, including determining when it is desirable for humans to respond to a robot the way participants responded to iCub’s gaze, and when it is not.

“Once we understand when robots elicit social attunement, then we can decide in which sort of context this is desirable and beneficial for humans and in which context this should not occur,” said IIT researcher Agnieszka Wykowska, according to Reuters.